-

Convolutional Neural Networks(CNN)MLAI/DeepLearning 2019. 9. 30. 17:28

1. Overview

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of deep neural networks, most commonly applied to analyzing visual imagery.

CNNs are regularized versions of multilayer perceptrons. Multilayer perceptrons usually mean fully connected networks, that is, each neuron in one layer is connected to all neurons in the next layer. The "fully-connectedness" of these networks makes them prone to overfitting data. Typical ways of regularization include adding some form of magnitude measurement of weights to the loss function. However, CNNs take a different approach towards regularization: they take advantage of the hierarchical pattern in data and assemble more complex patterns using smaller and simpler patterns. Therefore, on the scale of connectedness and complexity, CNNs are on the lower extreme.

They are also known as shift invariant or space invariant artificial neural networks (SIANN), based on their shared-weights architecture and translation invariance characteristics.

Convolutional networks were inspired by biological processes in that the connectivity pattern between neurons resembles the organization of the animal visual cortex. Individual cortical neurons respond to stimuli only in a restricted region of the visual field known as the receptive field. The receptive fields of different neurons partially overlap such that they cover the entire visual field.

CNNs use relatively little pre-processing compared to other image classification algorithms. This means that the network learns the filters that in traditional algorithms were hand-enginerred. This independence from prior knowledge and human effort in feature design is a major advantage.

They have applications in image and video recognition, recommender systems, image classification, medical image analysis, and natural language processing.

2. Description

2.1 Procedure Outline

Step 1: Convolution

Step 2: Max pooling

Step 3: Flattening

Step 4: Full Connection

2.2 Convolution

$$(f*g)(t)\overset{def}{=}\int_{-\infty }^{\infty}f(\tau )g(t-\tau )d\tau $$

Convolution is basically a combined integration of two function and it shows you how one function modified the other

2.3 Stride and Feature detector(Kernel or Filter) and Feature map(Convolved feature or Activation map)

Reduce the size of the input image

2.3.1 Feature detector

The purpose of feature detector is detecting features, certain part of image that are integral

It detect where certain features are in the image

Each Feature detector has a certain pattern on it. The highest number you can get is when the feature matches exactly.

Some information losing

Neural networks they will decide for themselves what's important what's not and it probably won't even recognizable to human eye. You won't be able to understand what those features mean but the computer will decide ant that's the beauty of Neural networks that they can process so many different things and understand without even having that intuition and without having that explanation why they will understand which features are important to them.

2.3.2 Feature

Features is how we see things, is how we recognise things because we don't look at every single pixel in what we see on an image or in real life but look at features which are nose, the hat, the feather, the eyes. we look at features and that's what we're preserving.

2.3.3 Feature map

Feature map helps us preserve what it allows us to bring forward and get rid of all the unnecessary thigns that even as humans we don't process there's so much information going into your eyes at any given time like gigabytes of information if you look at every single dot. And still we're able to process that because we get rid of what is unnecessary we only focus on the important features.

It still preserve the special relationship between pixels which is very important for us.

2.3.4 Non matching case

2.3.5 One matching case

2.3.6 End of Stride

The number 4 in Feature map is when that pattern matches up.

2.3.7 Multi Feature detector processed

Creating multiple feature map using different filter to preserve lots of information and look for certain features and basically the network decides through its training it decides which features are important for certain types or certain categories.

2.4 ReLU Layer(Rectified Linear Unit)

The reason why we're apply the Rectifier is because we want to increase non-linearity in our image or in our Convolutional neural network.

Rectifier acts as that filter or that function which break up linearity

The reason why we want to increase non-linearity in our network is because images themselves are highly non-linear, especially if you're recognizing different objects next to each other or just on this background and stuff like that the image is going to have lots of non-linear elements and the transition between adjacent pixels is often gonna be non-linear. That's because of borders, there's different colors, different elements in your images. But at the same time, when we're applying mathematical operations such as convolution and running this feature detection to create our feature maps, we risk that we might create some linear.

2.5 Pooling(Downsampling)

We have to make sure that our neural network has a property called spatial invariance meaning that it doesn't care where the feastures are located, not so much as in which part of the image because we've kind of take that into consideration with our convolution layer. But it doesn't have to care if the features are a bit tilted, different in texture, closer, further apart relative to each other, and feature itself is a bit distorted. Our neural network has to have some level of flexibility to be able to still find that feature. That is what pooling is all about.

2.5.1 Max pooling

Still preserve feature which is maximum numbers they represent where you actually found the closest similarity to your feature. But by pooling this feature, we are getting rid of 75% of the information that is not the feature which is not the important things that we're looking out for because we're disregarding 3 pixels out of 4 so we're only keeping 25%.

Therefor, we are accounting for any distortion. So for instance, 2 images in which the cheetahs tears' on the eyes are in 1 image a bit to the left or a bit rotated to the left and then in the other one they're a bit how they're supposed to be, the pooled feature exactly same. That's kind of the principle behind it. It preserve features and account for their possible spatial or textural or other possible distortion. In addition ot all of that, we're reducing the size by 75% which is huge, that's another benefit.

Another benefit of pooling is reducing the number of parameters, for instance 75% of above example, go into our final layers of neural network, therefore we prevent overfitting. By disregarding the unnecessary non-important information, we're helping with preventing of overfitting.

2.6 Flattening

When you have many pooling layers with many pooled feature maps, flatten them into this one long column which is sequentially one after the other. And you get one huge vector of inputs for an artificial neural network.

2.7 Full connection

All of above step things which are convolution, pooling, and flattening are added into whole new ANN on the back of that. The fully connected layer, which is also called generally hidden layer, is specific version of hidden layer which is fully connected to other layer. And consist of new feature vector of output of flattening and new feature for this new ANN to even better predict things that we're trying to predict.

Assuming prediction is made and for instance 80% that is a dog, but actually a cat. And then an error is calculated. What we used to call a cost function and we use the mean square error or loss function and use a cross entropy function for that. Those tell us how well our network is performing and we're trying to minimize that function to optimize our network.

Back-propagation and gradient descent are proceeded for adjusting network. The things are usual. the weights in the artificial neural network which is also called synopsis are adjusted and also the feature detectors are adjusted. And above adjusting repeat on and on til network is optimized.

2.7.1 How multiple output works

For instance, in one step forward of output, feature combination output a eyebrow, a big nose, and a floppy ears are firing up, and then it's up to dog and cat neuron to understand what that means for them. That combination indicate for network that it is a dog and throughout these lots and lost of iterations if this happens many times, the dog will learn that these neurons' combination do indeed fire up when the feature belongs to dog. On the other hand, the cat neuron will know that it's not a cat and it will know that this feature is not a cat. the more the cat neuron is gonna ignore this neuron as iteration goes on.

After lots of iteration, the dog neuron learned that this eyebrow neuron and this big nose neuron and this floppy ears neuron, they all seem to really contribute very well to the classification of what it's looking for and which is a dog.

The output neurons learn which of the final fully connected layer neurons to listen to. And that's how the features are propagated through the network and conveyed to the output. And so even though these features, of course don't have that much meaning to them, like floppy ears, or whiskers, at the same time, they do have some distinctive of that specific class. And that's how the network is trained because we also during the back propagation process. we also adjust the feature detectors. So if a feature is useless to the output, it's going to probably be disregarded or replaced with feature as useful.

When an image of a dog pass into network, the values are propagated through our network, we get certain values. They have learned to listen to each the dog and the cat. And listens to some specific combination of neurons and produce each output.

Voting is a term that is used in the final fully connected layer. They get to vote and above samples are their votes. Weights between final fully connected layer and output neurons are the important of their vote. So above purple weights are how the dog neuron views their votes, how much important is it assigns to these neurons. And to those green votes and this is how much importance the cat's neuron assigns to these though to the votes of these neurons. And so these final fully connected layer neurons vote, the dog and the cat based on their learned weights they decide who to listen to and then make their predictions and then hold neural network concludes that this is in first case a dog and second case a cat.

2.8 Summarize

Input image is applied multiple different feature detectors to create feature maps, and this comprise our Convolutinoal layer. Then on top of that Convolutional layer we applied the ReLU to remove any linearity or increase nonlinearity. Then we applied a Pooling layer to our Convolutional layer so from every single feature map we created a Pooled feature map and basically the Pooling layer has lots of advantages. Main purpose of pooling layer is to make sure spatial invariance in our images and also reduce size of our images significantly and avoid overfitting by simply getting rid of lots of data. Then flatten all of the Pooled images into one long vector. Then we input that into a artificial neural network. and Final fully connected layer which performs the voting toward the classes that we're altering. All these parameters are train through forward and backward propagation iterations. Not only neural network weights are trained, but also the feature detectors are trained and adjusted in that same gradient descent process that allows us to come up with the best feature maps. And in the end we get a fully trained convolutional neural network which can recognize images and classify them.

3. Example

3.1 Filter(Feature detector)

3.1.1 Sharpen

3.1.2 Blur

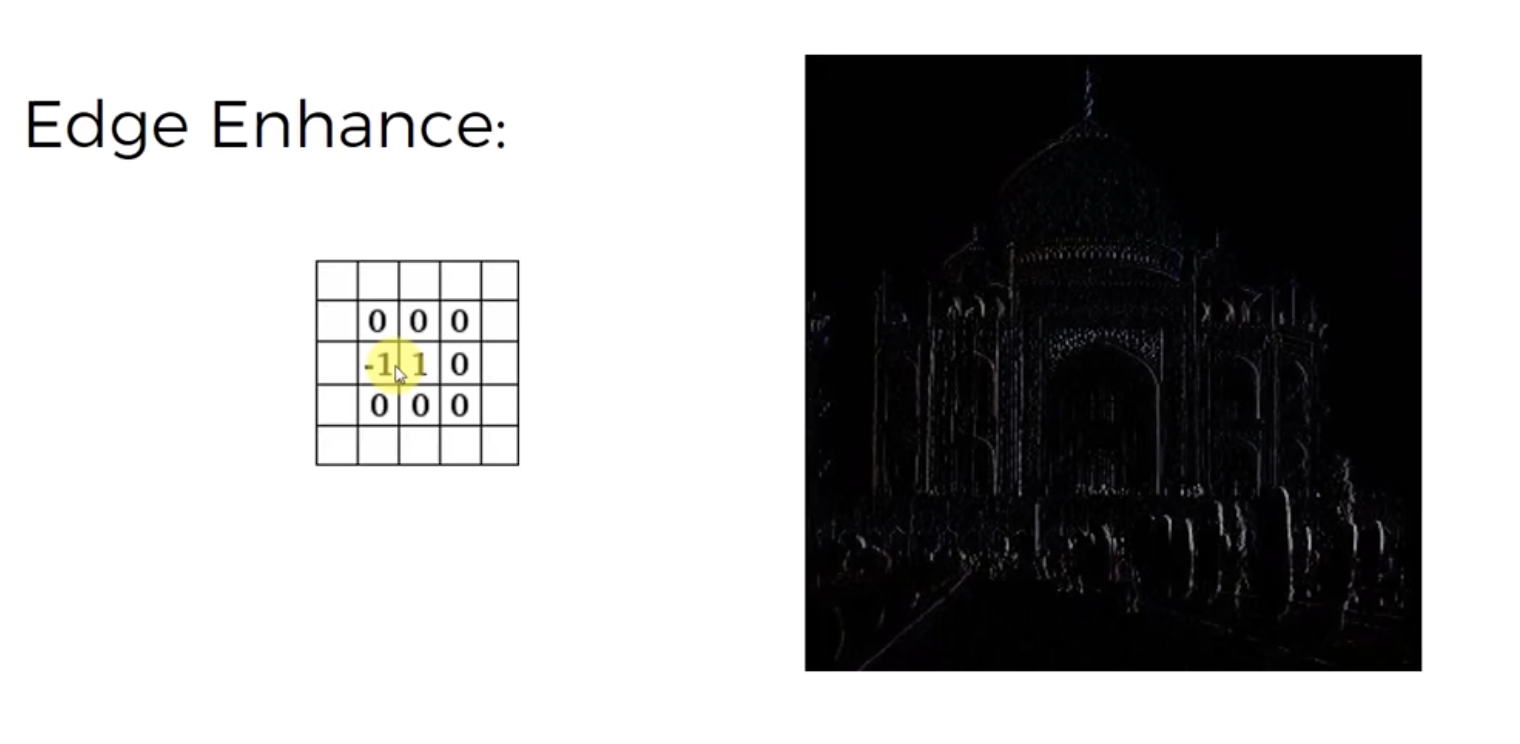

3.1.3 Edge enhance

3.1.4 Edge detect

3.1.5 Emboss

3.2 ReLu Layer

3.2.1 Before feature detector

3.2.2 After feature detector

Feature detector can have negative values in themselves, So sometimes you will ge negative values

3.2.3 After Rectified linear unit function

It removes all the black that anything below zero is turns into zero which means gradual gray progression is gone.

4. References

http://scs.ryerson.ca/~aharley/vis/conv/flat.html

https://en.wikipedia.org/wiki/Convolution

https://arxiv.org/abs/1609.04112

https://arxiv.org/abs/1502.01852

https://www.ais.uni-bonn.de/papers/icann2010_maxpool.pdf

https://pdfs.semanticscholar.org/450c/a19932fcef1ca6d0442cbf52fec38fb9d1e5.pdf

https://en.wikipedia.org/wiki/Convolutional_neural_network

http://vision.stanford.edu/cs598_spring07/papers/Lecun98.pdf

https://adeshpande3.github.io/The-9-Deep-Learning-Papers-You-Need-To-Know-About.html

'MLAI > DeepLearning' 카테고리의 다른 글

Boltzmann Machine with Energy-Based Models and Restricted Boltzmann machines(RBM) (0) 2019.10.19 Classify Deep Learning (0) 2019.10.16 Softmax and Cross-Entropy with CNN (0) 2019.10.16 Artificial neural network(ANN) (0) 2019.10.05 Difference between Deep Learning and Shallow learning (0) 2019.09.25