
Relationship of Thread, Process, OS, and Memory

Modeling/Architecture, 2020. 2. 4. 15:13

1. Overview

When we turn on our computer, a special program called the operating system is loaded from the disk into memory. The operating system takes over, provides an abstraction for us application developers, and helps us interact with the hardware and the CPU so that we can focus on developing our apps.

All our applications, such as a text editor, a web browser, or a music player, reside on the disk in the form of files, just like any other music file, image, or document. When the user runs an application, the operating system takes the program from the disk and creates an instance of that application in memory. That instance is called a process, and it is also sometimes called the context of an application.

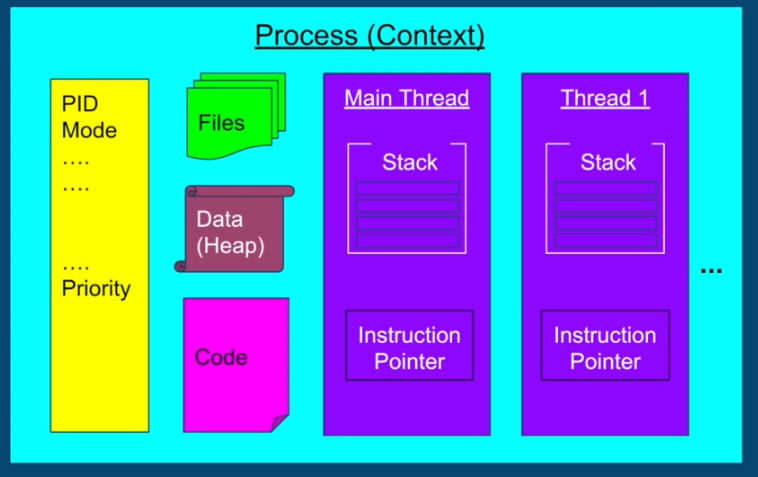

Each process is completely isolated from every other process running on the system. A few of the things a process contains are:

- Metadata, such as the process ID

- The files that the application opens for reading and writing

- The code, which is the program instructions that are going to be executed on the CPU

- The heap, which contains all the data our application needs

- At least one thread, called the main thread

The thread contains two main things: the stack and the instruction pointer.
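A running Java program can read some of this per-process metadata itself. The sketch below assumes Java 9 or later (for `ProcessHandle`); the class and method names are only illustrative.

```java
public class ProcessInfo {
    // OS-assigned ID of the process this JVM runs in (ProcessHandle exists since Java 9).
    static long currentPid() {
        return ProcessHandle.current().pid();
    }

    // Name of the thread executing this call; the JVM names the thread that runs main() "main".
    static String currentThreadName() {
        return Thread.currentThread().getName();
    }

    // Maximum amount of heap memory the JVM will attempt to use, in bytes.
    static long maxHeapBytes() {
        return Runtime.getRuntime().maxMemory();
    }

    public static void main(String[] args) {
        System.out.println("Process ID:  " + currentPid());
        System.out.println("Thread name: " + currentThreadName()); // "main" when called from main()
        System.out.println("Max heap:    " + maxHeapBytes() + " bytes");
    }
}
```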

In a multi-threaded application, each thread comes with its own stack and its own instruction pointer, but all the other components of the process are shared by all threads.
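The split between shared and per-thread state shows up in a small Java sketch (class, record, and method names are hypothetical): objects created on the heap are reachable from every thread, while each thread's local variables live on its own stack.

```java
import java.util.Arrays;
import java.util.concurrent.atomic.AtomicInteger;

public class SharedHeapDemo {
    record Result(int[] localCounts, int sharedTotal) {}

    static Result run(int threadCount, int increments) {
        // Heap objects: every thread in the process can reach them.
        AtomicInteger shared = new AtomicInteger();
        int[] localCounts = new int[threadCount];

        Thread[] workers = new Thread[threadCount];
        for (int t = 0; t < threadCount; t++) {
            final int id = t;
            workers[t] = new Thread(() -> {
                int local = 0; // lives on this worker's own stack; invisible to other threads
                for (int i = 0; i < increments; i++) {
                    local++;
                    shared.incrementAndGet(); // atomic, because all workers update it
                }
                localCounts[id] = local; // each worker writes only its own slot
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join(); // join() also makes the worker's writes visible to this thread
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return new Result(localCounts, shared.get());
    }

    public static void main(String[] args) {
        Result r = run(4, 10_000);
        System.out.println("shared total: " + r.sharedTotal()); // 40000
        System.out.println("each local:   " + Arrays.toString(r.localCounts()));
    }
}
```

All four workers see and update the same `shared` counter, while each `local` variable is private to the thread whose stack holds it.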

2. Description

2.1 Components

2.1.1 Stack

The region of memory where local variables are stored and through which arguments are passed into functions
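A Java sketch can make the per-thread, bounded nature of the stack visible: each recursive call pushes a new frame holding its own local state until the thread's stack runs out. The `stackSize` argument of the `Thread(ThreadGroup, Runnable, String, long)` constructor is only a hint that some platforms round or ignore, and catching `StackOverflowError` is done here purely for demonstration.

```java
public class StackDepthDemo {
    // Runs a recursive probe on a new thread with the requested stack size and
    // returns how many nested calls fit before that thread's stack overflowed.
    static int measureDepth(long stackSizeBytes) {
        int[] result = new int[1];
        Runnable probe = new Runnable() {
            private int depth = 0;

            // Every call pushes a new frame onto this thread's stack.
            private void dive() {
                depth++;
                dive();
            }

            @Override
            public void run() {
                try {
                    dive();
                } catch (StackOverflowError e) {
                    result[0] = depth; // demo only: not a normal way to handle this error
                }
            }
        };
        Thread t = new Thread(null, probe, "stack-probe", stackSizeBytes);
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return result[0];
    }

    public static void main(String[] args) {
        System.out.println("256 KiB stack: " + measureDepth(256 * 1024) + " frames");
        System.out.println("4 MiB stack:   " + measureDepth(4 * 1024 * 1024) + " frames");
    }
}
```

The exact depths vary by JVM and platform, but a larger stack usually fits more frames, and the overflow affects only the probe thread.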

2.1.2 Instruction pointer

Address of the next instruction to execute

2.2 Context Switch

Each instance of an application we run runs independently of other processes, and there are normally far more processes than cores. Each process may have one or more threads, and all these threads compete with each other to be executed on the CPU. Even with multiple cores, there are still far more threads than cores, so the operating system has to run one thread, stop it, run another thread, stop it, and so on.

A context switch between two threads consists of four steps:

- Stop thread 1

- Schedule thread 1 out

- Schedule thread 2 in

- Start thread 2

The act of stopping one thread, scheduling it out, scheduling in another thread, and starting it is called a context switch.

- A context switch is not cheap; it is the price of multitasking (concurrency)

- Just as with humans who multitask, refocusing takes time

- Each thread consumes resources in the CPU and memory

- A context switch involves storing the data of one thread and restoring the data of another thread

- Too many threads lead to thrashing: spending more time on management than on real productive work

- Threads consume fewer resources than processes, so context switching between threads of the same process is cheaper than switching between processes
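One rough way to observe switching overhead in Java is a ping-pong between two threads over `SynchronousQueue`s, where every hand-off blocks one thread until the other runs. This is a hedged sketch, not a benchmark: on a multi-core machine the two threads may sit on different cores, so the per-round-trip time measures hand-off cost (blocking, waking, and scheduling) rather than a pure context switch.

```java
import java.util.concurrent.SynchronousQueue;

public class PingPong {
    // Each put() blocks until the other thread take()s, so every exchange
    // requires the scheduler to get the other thread running.
    static long roundTrips(int n) {
        SynchronousQueue<Integer> ping = new SynchronousQueue<>();
        SynchronousQueue<Integer> pong = new SynchronousQueue<>();

        Thread responder = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) {
                    pong.put(ping.take()); // echo every message back
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        responder.start();

        long completed = 0;
        try {
            for (int i = 0; i < n; i++) {
                ping.put(i);
                if (pong.take() == i) {
                    completed++;
                }
            }
            responder.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return completed;
    }

    public static void main(String[] args) {
        int n = 100_000;
        long start = System.nanoTime();
        long done = roundTrips(n);
        long elapsed = System.nanoTime() - start;
        System.out.printf("%d round trips, ~%d ns each%n", done, elapsed / Math.max(1, done));
    }
}
```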

2.3 Thread Scheduling

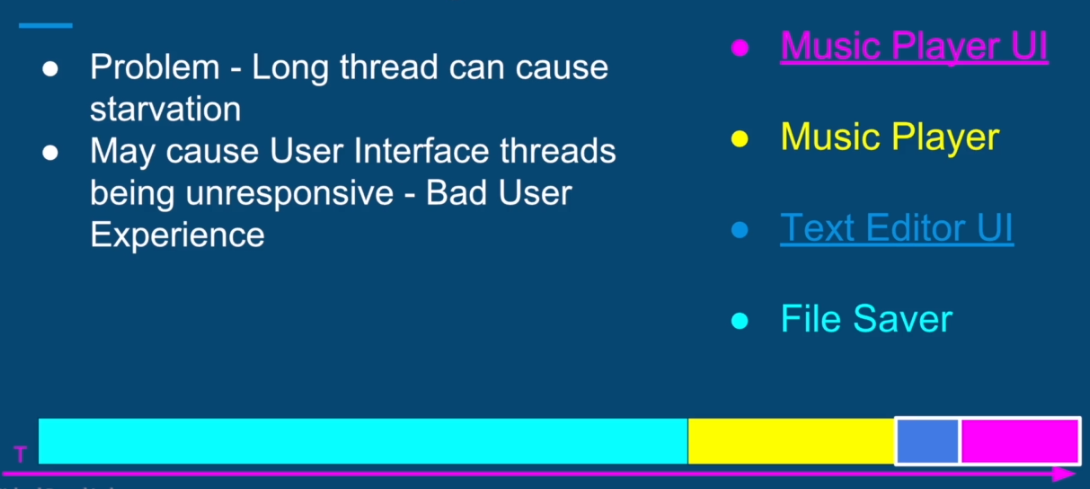

Consider a music player and a text editor running at the same time. The music player has two threads: one loads the music from the file and plays it through the speakers, and the other is the UI thread that shows the progress of the track and responds to mouse clicks on the play and stop buttons. The text editor also has two threads: one is again a UI thread that shows what we have already typed and responds to keyboard and mouse events, and the other runs every two seconds and saves our current work to a file. For simplicity, assume we have a single core and these four threads to schedule on it.
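The text editor's autosave thread maps naturally onto a scheduled background thread. A minimal Java sketch, assuming `ScheduledExecutorService` as the mechanism and a counter as a stand-in for writing the file (the class and method names are hypothetical):

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class AutosaveDemo {
    // Schedules a stand-in "save to file" task every periodMillis on a background thread
    // and waits until at least savesWanted saves have run; returns the number of saves.
    static int runAutosave(long periodMillis, int savesWanted) {
        ScheduledExecutorService autosaver = Executors.newSingleThreadScheduledExecutor();
        CountDownLatch enoughSaves = new CountDownLatch(savesWanted);
        AtomicInteger saves = new AtomicInteger();

        autosaver.scheduleAtFixedRate(() -> {
            saves.incrementAndGet(); // hypothetical: a real editor would write the document here
            enoughSaves.countDown();
        }, 0, periodMillis, TimeUnit.MILLISECONDS);

        try {
            // A real UI thread would keep handling events here; this sketch just waits.
            enoughSaves.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            autosaver.shutdownNow();
        }
        return saves.get();
    }

    public static void main(String[] args) {
        // Every two seconds, as in the text editor example: saves at 0 s, 2 s, and 4 s.
        System.out.println("saves: " + runAutosave(2000, 3));
    }
}
```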

2.3.1 First Come First Serve

If a very long thread arrives first, it can cause what's called starvation of the other threads. This is a particularly big problem for UI threads: it makes our applications unresponsive, and our users have a terrible experience.
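The effect is easy to see with a small simulation of non-preemptive first come, first served on one core. The burst lengths are hypothetical time units: a 100-unit computation that arrives just before three short UI tasks.

```java
import java.util.Arrays;

public class FcfsScheduling {
    // Non-preemptive first come, first served on one core: each job waits for
    // the full run time of every job that arrived before it.
    static int[] waitingTimes(int[] bursts) {
        int[] waits = new int[bursts.length];
        int clock = 0;
        for (int i = 0; i < bursts.length; i++) {
            waits[i] = clock;   // job i starts when all earlier jobs are done
            clock += bursts[i];
        }
        return waits;
    }

    public static void main(String[] args) {
        int[] bursts = {100, 2, 3, 1};
        System.out.println(Arrays.toString(waitingTimes(bursts))); // [0, 100, 102, 105]
    }
}
```

Every short UI task waits at least 100 units behind the long computation, which the user perceives as a frozen interface.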

2.3.2 Shortest Job First

This has the opposite problem. User-related events arrive at our system all the time, so if we always schedule the shortest job first, the longer tasks that involve computations will never be executed.
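A small simulation makes this starvation concrete. The sketch assumes a hypothetical workload: one 50-unit computation plus a 1-unit UI event arriving at every scheduling point, scheduled non-preemptively by shortest burst on one core.

```java
import java.util.Comparator;
import java.util.PriorityQueue;

public class SjfStarvation {
    record Job(String name, int burst) {}

    // Non-preemptive shortest job first on one core. Returns the time at which the
    // long computation starts, or -1 if it has not started before the horizon.
    static int longJobStart(int horizon, boolean eventsKeepArriving) {
        Comparator<Job> byBurst = Comparator.comparingInt(Job::burst);
        PriorityQueue<Job> ready = new PriorityQueue<>(byBurst);
        ready.add(new Job("long-computation", 50));

        int time = 0;
        while (time < horizon && !ready.isEmpty()) {
            // Hypothetical workload: a 1-unit UI event arrives at each scheduling point
            // (or only at time 0 when eventsKeepArriving is false).
            if (eventsKeepArriving || time == 0) {
                ready.add(new Job("ui-event-" + time, 1));
            }
            Job next = ready.poll(); // always the shortest ready job
            if (next.name().equals("long-computation")) {
                return time;
            }
            time += next.burst();
        }
        return -1;
    }

    public static void main(String[] args) {
        System.out.println(longJobStart(1000, true));  // -1: the computation is starved
        System.out.println(longJobStart(1000, false)); // 1: it runs once events stop
    }
}
```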

2.3.3 Time Slices

In general, the operating system divides time into moderately sized pieces called epochs. In each epoch, the operating system allocates a different time slice to each thread. Notice that not all threads get to run or complete in each epoch.
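A fixed-quantum round robin is a simplified stand-in for time slicing; real epoch-based schedulers give each thread a different slice. With a hypothetical workload of a 100-unit computation arriving before three short UI tasks (2, 3, and 1 units), a 4-unit slice already changes the picture:

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;

public class TimeSliceScheduling {
    // Round robin with a fixed quantum on one core; returns each job's completion time.
    static int[] completionTimes(int[] bursts, int quantum) {
        int[] remaining = Arrays.copyOf(bursts, bursts.length);
        int[] finished = new int[bursts.length];
        Deque<Integer> ready = new ArrayDeque<>();
        for (int i = 0; i < bursts.length; i++) {
            ready.add(i);
        }

        int time = 0;
        while (!ready.isEmpty()) {
            int job = ready.poll();
            int run = Math.min(quantum, remaining[job]); // run for at most one slice
            time += run;
            remaining[job] -= run;
            if (remaining[job] > 0) {
                ready.add(job);      // preempted: back of the queue
            } else {
                finished[job] = time;
            }
        }
        return finished;
    }

    public static void main(String[] args) {
        int[] done = completionTimes(new int[]{100, 2, 3, 1}, 4);
        System.out.println(Arrays.toString(done)); // [106, 6, 9, 10]
    }
}
```

The short tasks complete at times 6, 9, and 10 instead of waiting behind the long computation, and the computation still finishes, at time 106.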

The decision on how to allocate the time for each thread is based on a dynamic priority.

Dynamic Priority = Static Priority + Bonus (can be negative)

- Static Priority is set by the developer programmatically

- Bonus is adjusted by the Operating System in every epoch, for each thread

- Using dynamic priority, the OS gives preference to interactive threads (such as user interface threads)

- The OS gives preference to threads that did not complete in the last epochs or did not get enough time to run, preventing starvation
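The formula can be sketched as follows. Everything beyond the formula itself is an assumption for illustration: the `ThreadStats` fields, the +3/-2 adjustments, the [-5, +5] clamp, and the convention that a larger value means more preferred; real kernels use their own heuristics and numbering. In Java, the developer-set static priority corresponds to `Thread.setPriority` (from `Thread.MIN_PRIORITY`, 1, to `Thread.MAX_PRIORITY`, 10), which the JVM passes to the OS only as a hint.

```java
public class DynamicPriority {
    // Hypothetical per-thread statistics a scheduler might collect during the last epoch.
    record ThreadStats(String name, int staticPriority, boolean interactive, boolean starvedLastEpoch) {}

    static final int MAX_BONUS = 5; // assumed clamp: the bonus stays within [-5, +5]

    // Assumed bonus rule, for illustration only.
    static int bonus(ThreadStats s) {
        int b = 0;
        if (s.interactive()) b += 3;        // prefer interactive (e.g. UI) threads
        if (s.starvedLastEpoch()) b += 3;   // prefer threads that did not get enough time
        if (!s.interactive() && !s.starvedLastEpoch()) b -= 2; // CPU-bound thread that already ran
        return Math.max(-MAX_BONUS, Math.min(MAX_BONUS, b));
    }

    // Dynamic Priority = Static Priority + Bonus (larger = more preferred, by assumption)
    static int dynamicPriority(ThreadStats s) {
        return s.staticPriority() + bonus(s);
    }

    public static void main(String[] args) {
        ThreadStats[] threads = {
            new ThreadStats("ui", 5, true, false),
            new ThreadStats("compute", 5, false, false),
            new ThreadStats("compute-starved", 5, false, true),
        };
        for (ThreadStats s : threads) {
            System.out.println(s.name() + " -> " + dynamicPriority(s)); // 8, 3, 8
        }
    }
}
```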

3. When to use multiple threads or multiple processes (microservices)

3.1 When to prefer Multithreaded Architecture

- Prefer if the tasks share a lot of data

- Threads are much faster to create and destroy than processes

- Switching between threads of the same process is faster than switching between processes
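A rough way to check the creation-cost claim from Java is to time trivial threads against trivial child processes. This is a sketch, not a benchmark: it assumes the running JDK's `java` launcher can be started as the child (`java -version`, Java 9+ for `Redirect.DISCARD`), and because that child is a whole JVM, the gap it shows is larger than it would be for a small native program.

```java
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;

public class CreationCost {
    // Starts and joins n trivial threads; returns elapsed nanoseconds.
    static long timeThreads(int n) {
        long start = System.nanoTime();
        try {
            for (int i = 0; i < n; i++) {
                Thread t = new Thread(() -> { });
                t.start();
                t.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return System.nanoTime() - start;
    }

    // Starts and waits for n trivial child processes; returns elapsed nanoseconds.
    static long timeProcesses(int n) {
        String java = System.getProperty("java.home") + File.separator + "bin" + File.separator + "java";
        long start = System.nanoTime();
        try {
            for (int i = 0; i < n; i++) {
                Process p = new ProcessBuilder(java, "-version")
                        .redirectErrorStream(true)                      // merge stderr into stdout
                        .redirectOutput(ProcessBuilder.Redirect.DISCARD) // and throw both away
                        .start();
                p.waitFor();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        int n = 20;
        System.out.printf("threads:   %.3f ms each%n", timeThreads(n) / 1e6 / n);
        System.out.printf("processes: %.3f ms each%n", timeProcesses(n) / 1e6 / n);
    }
}
```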

3.2 When to prefer Multi-Process Architecture

- Security and stability are of higher importance

- Tasks are unrelated to each other
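Process isolation can be sketched in Java by launching a child JVM that fails on startup because its main class (a deliberately nonexistent, hypothetical name) cannot be found. The failure shows up only as the child's non-zero exit code; the parent keeps running. The sketch assumes Java 9+ for `ProcessBuilder.Redirect.DISCARD` and `ProcessHandle`.

```java
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;

public class ProcessIsolation {
    // Path to the java launcher of the JVM that is currently running.
    static String javaLauncher() {
        return System.getProperty("java.home") + File.separator + "bin" + File.separator + "java";
    }

    // Starts a child JVM that fails immediately and returns its exit code.
    // The failure stays inside the child process.
    static int runFailingChild() {
        try {
            Process child = new ProcessBuilder(javaLauncher(), "NoSuchMainClassForThisDemo")
                    .redirectErrorStream(true)
                    .redirectOutput(ProcessBuilder.Redirect.DISCARD)
                    .start();
            return child.waitFor();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        }
    }

    public static void main(String[] args) {
        int exit = runFailingChild();
        System.out.println("child exit code: " + exit); // non-zero
        System.out.println("parent PID " + ProcessHandle.current().pid() + " is still running");
    }
}
```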

