
Metric of Performance

TestMetric 2020. 2. 26. 17:54

1. Overview

Network or multithreading performance refers to measures of the service quality of a system as seen by the customer or by the modules that use it.

2. Description

2.1 Latency

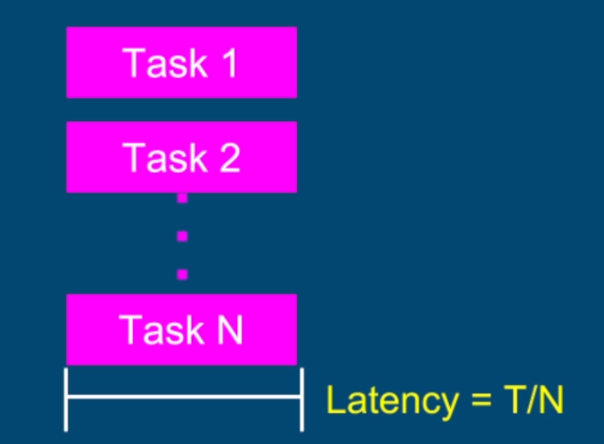

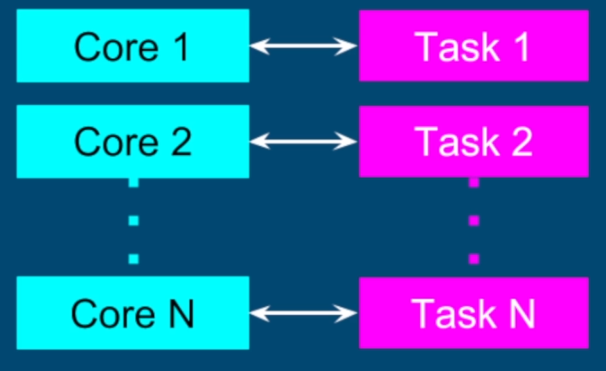

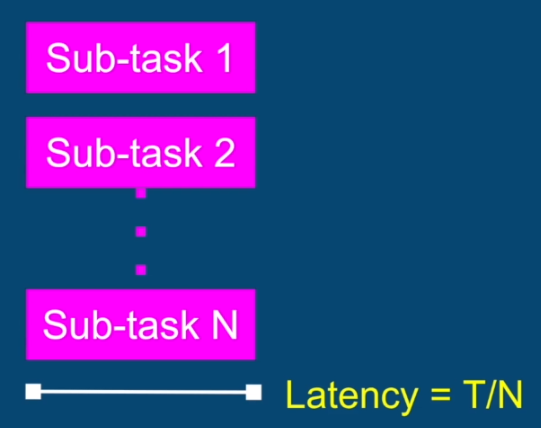

Latency is the time to completion of a task, measured in time units. In theory, reducing latency by a factor of N is a performance improvement by a factor of N. What is N, that is, into how many subtasks/threads should we break the original task? On a general-purpose computer, N is the number of cores.

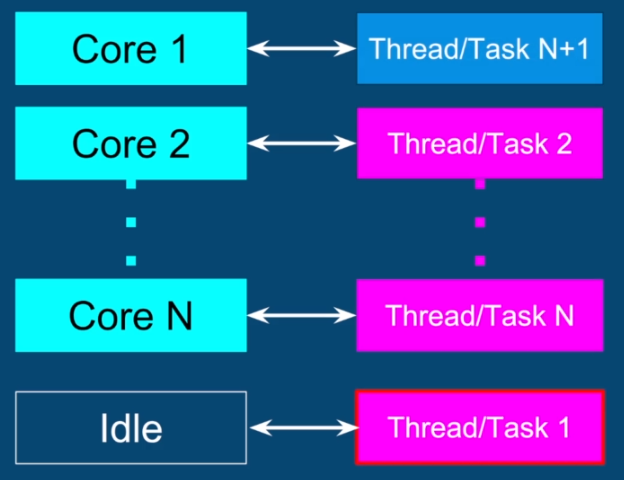

Adding even a single thread beyond the number of cores is counterproductive: it reduces performance and increases latency. The additional thread keeps pushing the other threads off their cores, back and forth, and the resulting context switches cost performance and extra memory. A few notes on the rule "number of threads = number of cores" before we proceed:

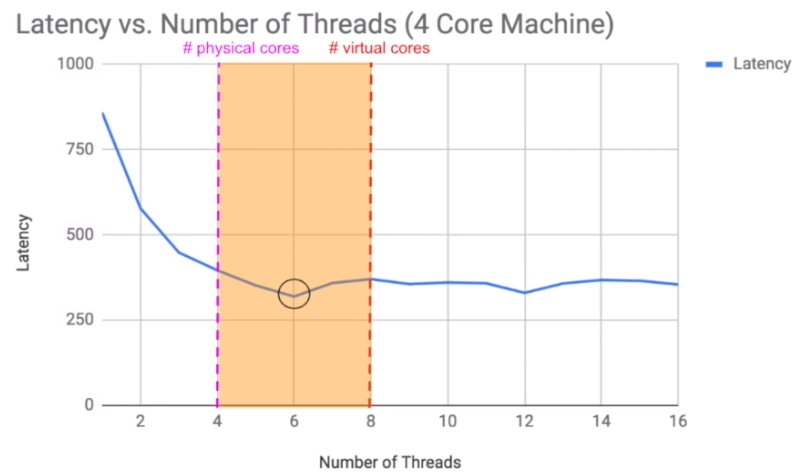

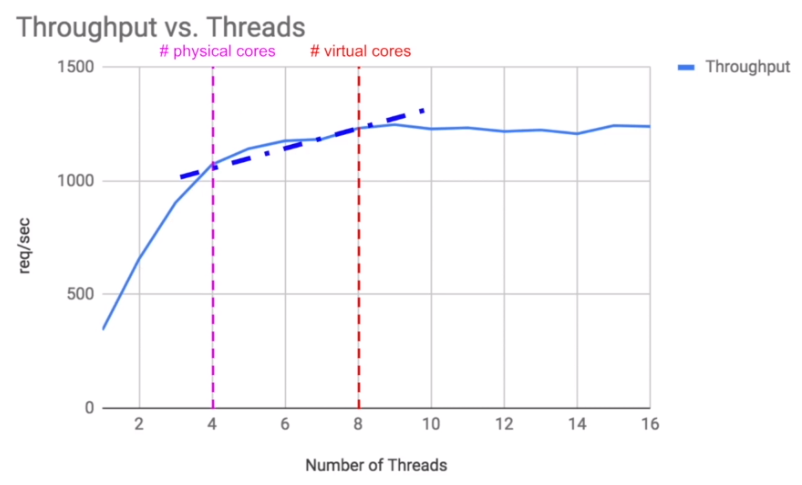

Setting the number of threads equal to the number of cores is optimal only if all threads are runnable and can run without interruption, which means no blocking I/O calls, no sleep, and so on. It also assumes that nothing else that consumes a lot of CPU is running.
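As a minimal sketch of this rule, the snippet below sizes the worker count from the number of processors the JVM reports. The class name `ThreadCountDemo` and the helper `optimalThreadCount` are illustrative names, not part of the original post; `Runtime.getRuntime().availableProcessors()` is the standard JDK call, and on hyperthreaded machines it usually reports logical rather than physical cores.

```java
// Hypothetical helper: the names are illustrative, not from the original post.
class ThreadCountDemo {

    // Number of worker threads for CPU-bound work, assuming no blocking I/O
    // and no other CPU-heavy process running on the machine.
    static int optimalThreadCount() {
        // availableProcessors() is documented to never return less than one.
        return Runtime.getRuntime().availableProcessors();
    }

    public static void main(String[] args) {
        System.out.println("Worker threads: " + optimalThreadCount());
    }
}
```

In the example of section 2.1.3, a value like this could take the place of the hard-coded thread count passed to `recolorMultithreaded`.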

2.1.1 Hyperthreading

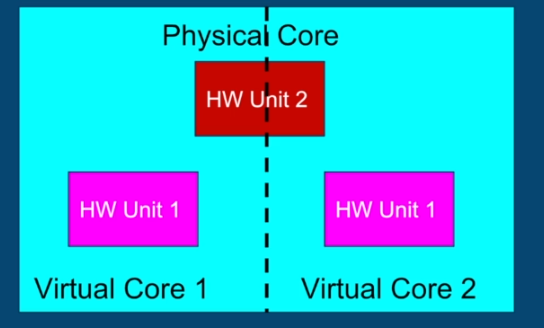

In fact, most computers today use what is called hyperthreading: a single physical core can run two threads at a time. This is achieved by duplicating some hardware units in the physical core, so the two threads can run in parallel, while other hardware units are shared. As a result, we can never run all threads 100% in parallel with each other, but we get close to that.

2.1.2 Inherent Cost of Parallelization and Aggregation

- Breaking a task into multiple tasks

- Thread creation, passing tasks to threads

- The time between thread.start() and the thread getting scheduled

- Time until the last thread finishes and signals

- Time until the aggregating thread runs

- Aggregation of the sub results into a single artifact

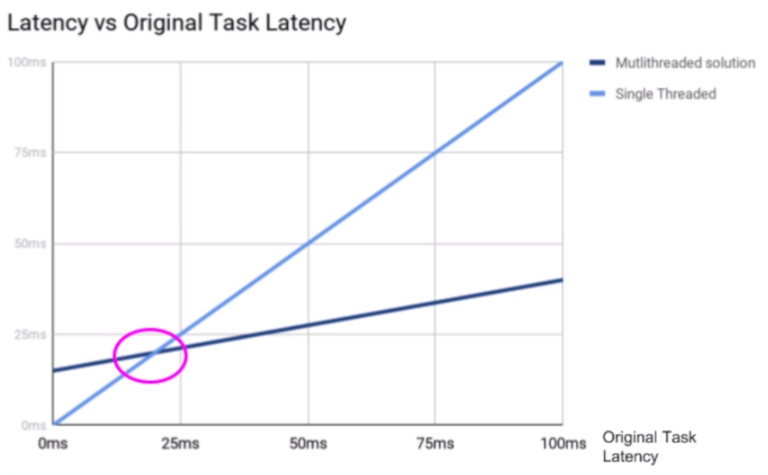

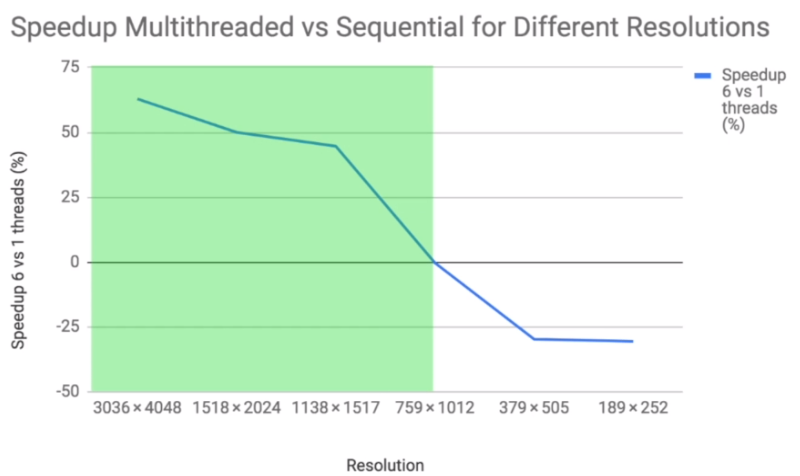

We can see that the multithreaded solution has a constant penalty that we pay for a task of any size, but the longer and heavier the original task is, the more worthwhile it is to break it up and run it in parallel. The point where we decide whether a task is worth parallelizing is the intersection of the multithreaded and single-threaded curves. The key takeaway from this graph is that small and trivial tasks are just not worth breaking up and running in parallel.
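The intersection point can be estimated with a deliberately simplified cost model, sketched below. The model (single-threaded latency = T, multithreaded latency = C + T/N with a constant overhead C) and the numbers in `main` are assumptions for illustration, not measurements from the original post; the class and method names are hypothetical.

```java
// Simplified cost model (an assumption): multithreaded latency is a
// constant overhead plus an ideal split of the work across the threads.
class BreakEvenDemo {

    // Single-threaded latency: only the task's own work.
    static double singleThreaded(double taskMs) {
        return taskMs;
    }

    // Multithreaded latency: constant penalty + work divided evenly.
    static double multiThreaded(double taskMs, double overheadMs, int threads) {
        return overheadMs + taskMs / threads;
    }

    // Task size where both curves meet: T = C + T/N  =>  T = C * N / (N - 1).
    static double breakEven(double overheadMs, int threads) {
        if (threads < 2) {
            throw new IllegalArgumentException("need at least 2 threads");
        }
        return overheadMs * threads / (threads - 1.0);
    }

    public static void main(String[] args) {
        double overheadMs = 3.0; // assumed constant penalty
        int threads = 4;         // assumed number of cores

        System.out.println("break-even task size (ms): " + breakEven(overheadMs, threads));
        System.out.println("1 ms task, multithreaded:   " + multiThreaded(1.0, overheadMs, threads));
        System.out.println("100 ms task, multithreaded: " + multiThreaded(100.0, overheadMs, threads));
    }
}
```

Under these assumed numbers, a 1 ms task is slower when parallelized (3.25 ms) while a 100 ms task is much faster (28 ms); the two approaches break even at a task size of 4 ms.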

2.1.3 Example

```java
import javax.imageio.ImageIO;
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.util.ArrayList;
import java.util.List;

public class latencyDemo {
    public static URI SOURCE_FILE;

    static {
        try {
            // Load the image from the classpath of this class.
            SOURCE_FILE = latencyDemo.class.getResource("/many-flowers.jpg").toURI();
        } catch (URISyntaxException e) {
            e.printStackTrace();
        }
    }

    public static final String DESTINATION_FILE = "out/many-flowers.jpg";

    public static void main(String[] args) throws IOException {
        BufferedImage originalImage = ImageIO.read(new File(SOURCE_FILE));
        BufferedImage resultImage = new BufferedImage(originalImage.getWidth(), originalImage.getHeight(),
                BufferedImage.TYPE_INT_RGB);

        // Measure only the recoloring, not the file I/O.
        long startTime = System.currentTimeMillis();
        // recolorSingleThreaded(originalImage, resultImage);
        recolorMultithreaded(originalImage, resultImage, 16);
        long endTime = System.currentTimeMillis();
        long duration = endTime - startTime;

        File outputFile = new File(DESTINATION_FILE);
        ImageIO.write(resultImage, "jpg", outputFile);

        System.out.println(duration);
    }

    // Splits the image into horizontal slices and recolors each slice on its own thread.
    public static void recolorMultithreaded(BufferedImage originalImage, BufferedImage resultImage,
                                            int numberOfThreads) {
        List<Thread> threads = new ArrayList<>();
        int width = originalImage.getWidth();
        int height = originalImage.getHeight() / numberOfThreads;

        for (int i = 0; i < numberOfThreads; i++) {
            final int threadMultiplier = i;

            Thread thread = new Thread(() -> {
                int leftCorner = 0;
                int topCorner = height * threadMultiplier;
                // The last thread also takes the rows left over by the integer division.
                int sliceHeight = (threadMultiplier == numberOfThreads - 1)
                        ? originalImage.getHeight() - topCorner
                        : height;

                recolorImage(originalImage, resultImage, leftCorner, topCorner, width, sliceHeight);
            });

            threads.add(thread);
        }

        for (Thread thread : threads) {
            thread.start();
        }

        // Wait for every slice to finish before returning.
        for (Thread thread : threads) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }

    public static void recolorSingleThreaded(BufferedImage originalImage, BufferedImage resultImage) {
        recolorImage(originalImage, resultImage, 0, 0, originalImage.getWidth(), originalImage.getHeight());
    }

    // Recolors the rectangle that starts at (leftCorner, topCorner), clipped to the image bounds.
    public static void recolorImage(BufferedImage originalImage, BufferedImage resultImage,
                                    int leftCorner, int topCorner, int width, int height) {
        for (int x = leftCorner; x < leftCorner + width && x < originalImage.getWidth(); x++) {
            for (int y = topCorner; y < topCorner + height && y < originalImage.getHeight(); y++) {
                recolorPixel(originalImage, resultImage, x, y);
            }
        }
    }

    // Shifts gray-ish pixels toward a different tint and copies all other pixels unchanged.
    public static void recolorPixel(BufferedImage originalImage, BufferedImage resultImage, int x, int y) {
        int rgb = originalImage.getRGB(x, y);

        int red = getRed(rgb);
        int green = getGreen(rgb);
        int blue = getBlue(rgb);

        int newRed;
        int newGreen;
        int newBlue;

        if (isShadeOfGray(red, green, blue)) {
            newRed = Math.min(255, red + 10);
            newGreen = Math.max(0, green - 80);
            newBlue = Math.max(0, blue - 20);
        } else {
            newRed = red;
            newGreen = green;
            newBlue = blue;
        }

        int newRGB = createRGBFromColors(newRed, newGreen, newBlue);
        setRGB(resultImage, x, y, newRGB);
    }

    public static void setRGB(BufferedImage image, int x, int y, int rgb) {
        image.getRaster().setDataElements(x, y, image.getColorModel().getDataElements(rgb, null));
    }

    // A pixel counts as gray when its three components are within 30 of each other.
    public static boolean isShadeOfGray(int red, int green, int blue) {
        return Math.abs(red - green) < 30 && Math.abs(red - blue) < 30 && Math.abs(green - blue) < 30;
    }

    // Packs the components into a fully opaque ARGB integer.
    public static int createRGBFromColors(int red, int green, int blue) {
        int rgb = 0;

        rgb |= blue;
        rgb |= green << 8;
        rgb |= red << 16;
        rgb |= 0xFF000000;

        return rgb;
    }

    public static int getRed(int rgb) {
        return (rgb & 0x00FF0000) >> 16;
    }

    public static int getGreen(int rgb) {
        return (rgb & 0x0000FF00) >> 8;
    }

    public static int getBlue(int rgb) {
        return rgb & 0x000000FF;
    }
}
```

2.2 Throughput

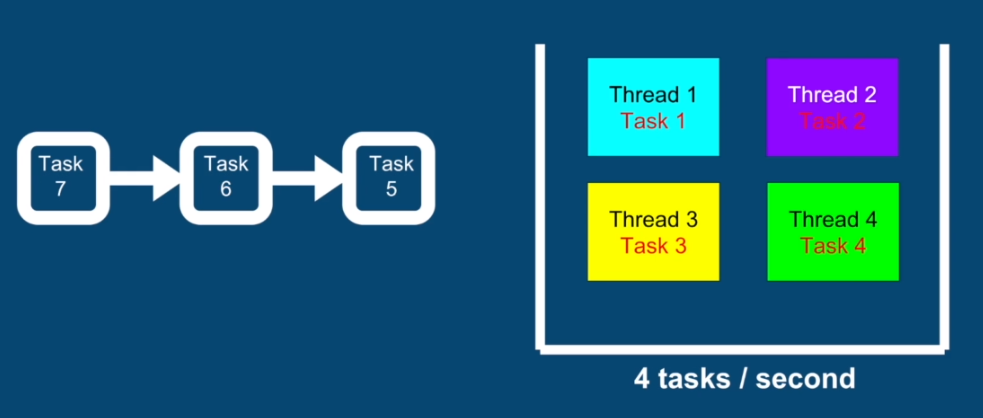

Throughput is the number of tasks completed in a given period, measured in tasks per time unit. When a program is given a concurrent flow of tasks and we want to complete as many of them as possible, as fast as possible, throughput is the right performance metric for evaluating it.

In theory, if Latency = $\frac{T}{N}$, then Throughput = $\frac{N}{T}$. In practice, Throughput < $\frac{N}{T}$, where T is the time to execute the original task and N is the number of subtasks (that is, the number of threads or the number of cores).
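As a quick worked example with assumed numbers (illustrative only, not measurements), take an original task of T = 8 s split across N = 4 cores:

$$
\text{Latency} = \frac{T}{N} = \frac{8\,\text{s}}{4} = 2\,\text{s}, \qquad \text{Throughput} = \frac{N}{T} = \frac{4}{8\,\text{s}} = 0.5\ \text{tasks/s}
$$

Both values are theoretical bounds: the real latency is higher than 2 s and the real throughput lower than 0.5 tasks/s once the cost of splitting and aggregating is included.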

2.2.1 Running Tasks in Parallel

Another approach is to run whole tasks in parallel by simply scheduling each task on a separate thread. In this case the maximum theoretical throughput is also $\frac{N}{T}$, but in practice we are much more likely to achieve it, because we skip the preprocessing (breaking a task into multiple subtasks) and the postprocessing (waiting for the last thread, running the aggregating thread, and combining the sub-results into a single artifact).

2.2.2 Thread pooling

Using a fixed thread pool, we maintain a constant number of threads and eliminate the need to recreate threads for every task. This can be a significant performance improvement: in theory, up to N times the throughput of a single thread.

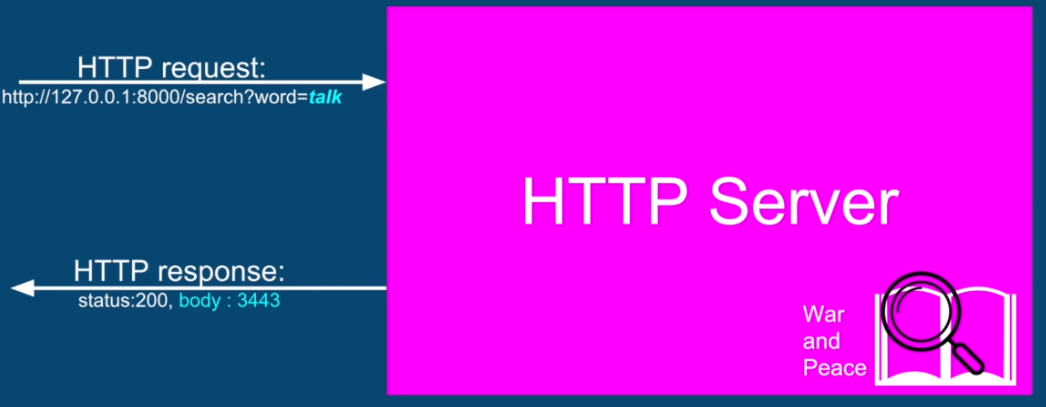

2.2.3 Example
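The original post leaves this section empty, so what follows is only a minimal sketch of the fixed thread pool idea using the standard `java.util.concurrent` API. The class `ThroughputDemo`, the stand-in workload `handleTask`, and the input values are hypothetical and chosen for illustration; a real service would submit its own request handlers instead.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ThroughputDemo {

    // Hypothetical stand-in for one independent task: sums the integers 1..n.
    static long handleTask(int n) {
        long sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += i;
        }
        return sum;
    }

    // Runs every task on a fixed pool and returns the results in submission order.
    static List<Long> runAll(List<Integer> inputs, int poolSize)
            throws InterruptedException, ExecutionException {
        // The pool creates at most poolSize threads and reuses them for all tasks.
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Future<Long>> futures = new ArrayList<>();
            for (int input : inputs) {
                Callable<Long> job = () -> handleTask(input);
                futures.add(pool.submit(job));
            }
            List<Long> results = new ArrayList<>();
            for (Future<Long> future : futures) {
                results.add(future.get()); // blocks until that task is done
            }
            return results;
        } finally {
            pool.shutdown(); // stop accepting tasks so the JVM can exit
        }
    }

    public static void main(String[] args) throws Exception {
        int poolSize = Runtime.getRuntime().availableProcessors();
        List<Integer> inputs = Arrays.asList(10, 100, 1000);

        long start = System.nanoTime();
        List<Long> results = runAll(inputs, poolSize);
        double seconds = (System.nanoTime() - start) / 1e9;

        System.out.println(results); // [55, 5050, 500500]
        System.out.printf("throughput: %.1f tasks/s%n", inputs.size() / seconds);
    }
}
```

The tasks here are far too small to show a real throughput gain; the point of the sketch is only the structure: tasks are submitted whole, with no splitting or aggregation step, and the same threads are reused for all of them.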

3. Reference

https://www.comparitech.com/net-admin/latency-vs-throughput/

https://en.wikipedia.org/wiki/Network_performance

https://www.vividcortex.com/blog/throughput-is-the-one-server-metric-to-rule-them-all
