
MapReduce vs Spark RDD

DistributedSystem/Spark, 2019. 9. 25. 08:16

1. Overview

MapReduce is widely adopted for processing and generating large datasets with a parallel, distributed algorithm on a cluster. It lets users write parallel computations using a set of high-level operators without having to worry about work distribution and fault tolerance. However, sharing data between MapReduce jobs is slow because of replication, serialization, and disk I/O; by some estimates, most Hadoop applications spend more than 90% of their time on HDFS read and write operations. Recognizing this problem, researchers developed a specialized framework called Apache Spark, whose key idea is the Resilient Distributed Dataset (RDD). This post compares MapReduce and Spark RDD from the point of view of data sharing.

2. Description

2.1 Comparison of data sharing in MapReduce and Spark RDD

| Architecture | MapReduce | Spark RDD |
| --- | --- | --- |
| Stored at | External stable storage system (e.g. HDFS) | RDD (in memory) |
| Performance | Slow | 10 to 100 times faster than MapReduce |
| Associated processes | Replication, serialization, disk I/O | Data sharing in memory; transformations on RDDs; optional persistence of RDDs on disk or replication across multiple nodes |

2.2 Data Sharing using MapReduce

The only way to reuse data between computations (e.g. between two MapReduce jobs) is to write it to an external stable storage system (e.g. HDFS). Both iterative and interactive applications need fast data sharing across parallel jobs, but in MapReduce every hand-off pays for replication, serialization, and disk I/O, and HDFS read/write operations can account for more than 90% of the total processing time.
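The hand-off between two jobs can be sketched in a single process. This is a minimal toy, assuming a local JSON file stands in for HDFS and two plain Python functions stand in for MapReduce jobs; `job1_word_count` and `job2_frequent_words` are hypothetical names, not Hadoop APIs. The only channel between the jobs is the file, so the hand-off costs a serialization, a disk write, a disk read, and a deserialization. HDFS replication is not modeled.

```python
import json
import os
import tempfile
from collections import Counter


def job1_word_count(lines, out_path):
    """Hypothetical job 1: count words, then serialize the result to 'stable storage'."""
    counts = Counter(word for line in lines for word in line.split())
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(dict(counts), f)  # serialization + disk write


def job2_frequent_words(in_path, min_count):
    """Hypothetical job 2: it cannot see job 1's memory, so it re-reads the file."""
    with open(in_path, encoding="utf-8") as f:
        counts = json.load(f)  # disk read + deserialization
    return sorted(word for word, count in counts.items() if count >= min_count)


if __name__ == "__main__":
    data = ["spark rdd spark", "hadoop mapreduce hadoop", "spark hadoop"]
    # A temporary directory stands in for HDFS (no replication is modeled).
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "job1_output.json")
        job1_word_count(data, path)
        print(job2_frequent_words(path, min_count=2))  # ['hadoop', 'spark']
```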

2.2.1 Iterative Operations on MapReduce

- Intermediate results are reused across multiple computations in multi-stage applications.

- Each reuse incurs substantial overhead from data replication, disk I/O, and serialization.
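The per-iteration cost can be sketched the same way. This is a hypothetical toy, assuming each iteration runs as a separate job whose only input is the previous job's output file (pickle files in a temporary directory stand in for HDFS); `run_iterative_on_disk` is an illustrative name, and it simply counts the disk reads and writes an n-step loop incurs.

```python
import os
import pickle
import tempfile


def run_iterative_on_disk(initial, step, iterations, workdir):
    """Hypothetical toy: each iteration is a separate 'job' that shares state
    with the next one only through a file in `workdir` (standing in for HDFS)."""
    counters = {"writes": 0, "reads": 0}

    def write(state, i):
        path = os.path.join(workdir, f"state_{i}.pkl")
        with open(path, "wb") as f:
            pickle.dump(state, f)  # serialize + write to "stable storage"
        counters["writes"] += 1
        return path

    def read(path):
        with open(path, "rb") as f:
            counters["reads"] += 1
            return pickle.load(f)  # read + deserialize

    path = write(initial, 0)
    for i in range(1, iterations + 1):
        state = step(read(path))  # the next job starts from the previous job's output
        path = write(state, i)
    return read(path), counters


def halve(xs):
    return [x / 2 for x in xs]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        result, counters = run_iterative_on_disk([8, 4], halve, iterations=3, workdir=tmp)
        print(result, counters)  # [1.0, 0.5] {'writes': 4, 'reads': 4}
```

With 3 iterations the loop performs 4 writes and 4 reads: one full round trip through storage per iteration, before counting any replication HDFS would add.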

2.2.2 Interactive Operations on MapReduce

- Each query performs disk I/O against stable storage, which can dominate application execution time.
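A hypothetical sketch of the interactive case, assuming a CSV file stands in for data on stable storage and each query is an independent job that keeps no state between runs. `DiskBackedTable` is an illustrative name; its `scans` counter shows that every query rescans the whole file.

```python
import csv
import os
import tempfile


class DiskBackedTable:
    """Hypothetical toy: every query is an independent job with no shared memory,
    so it must re-scan the file on 'stable storage' from the beginning."""

    def __init__(self, path):
        self.path = path
        self.scans = 0  # number of full passes over the file on disk

    def query(self, predicate):
        self.scans += 1
        with open(self.path, newline="", encoding="utf-8") as f:
            return [row for row in csv.DictReader(f) if predicate(row)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "logs.csv")
        with open(path, "w", newline="", encoding="utf-8") as f:
            writer = csv.writer(f)
            writer.writerow(["level", "msg"])
            writer.writerows([["ERROR", "disk full"], ["INFO", "ok"], ["ERROR", "timeout"]])

        table = DiskBackedTable(path)
        errors = table.query(lambda r: r["level"] == "ERROR")
        infos = table.query(lambda r: r["level"] == "INFO")
        print(len(errors), len(infos), table.scans)  # 2 1 2
```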

2.3 Data Sharing using Spark RDD

The key idea of Spark is the Resilient Distributed Dataset (RDD), which supports in-memory computation. Spark keeps state in memory as objects across jobs, and those objects can be shared between jobs. Sharing data in memory is 10 to 100 times faster than sharing it over the network or through disk.

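- Iterative Operations on Spark RDD

  - Store intermediate results in distributed memory instead of on stable storage (disk).

  - If distributed memory (RAM) is not sufficient to hold the intermediate results (the state of the job), whether they go to disk depends on the storage level: with a disk-backed level such as MEMORY_AND_DISK, Spark stores the partitions that do not fit on disk, while with the default memory-only level those partitions are recomputed when needed.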

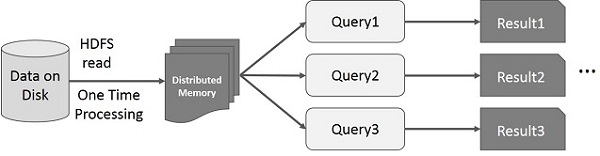
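The iterative case on Spark can be approximated with a toy store, assuming a made-up memory budget counted in partitions rather than bytes: intermediate partitions stay in memory as live Python objects while they fit, and the rest are pickled to local files, roughly what a MEMORY_AND_DISK storage level does. `SpillingStore` and `iterate_in_memory` are hypothetical names; Spark's real block manager, memory accounting, and eviction are far more involved.

```python
import os
import pickle
import tempfile


class SpillingStore:
    """Hypothetical toy of a MEMORY_AND_DISK-like policy (not Spark's BlockManager):
    partitions stay in RAM as live objects while they fit in `memory_budget`
    (counted in partitions, a simplifying assumption); the rest are pickled to disk."""

    def __init__(self, memory_budget, spill_dir):
        self.memory_budget = memory_budget
        self.spill_dir = spill_dir
        self.in_memory = {}  # partition id -> Python object
        self.on_disk = {}    # partition id -> path of the spilled file

    def put(self, pid, partition):
        if pid in self.in_memory or len(self.in_memory) < self.memory_budget:
            self.in_memory[pid] = partition  # fast path: no serialization, no I/O
        else:
            path = os.path.join(self.spill_dir, f"part_{pid}.pkl")
            with open(path, "wb") as f:
                pickle.dump(partition, f)  # slow path: spill to local disk
            self.on_disk[pid] = path

    def get(self, pid):
        if pid in self.in_memory:
            return self.in_memory[pid]
        with open(self.on_disk[pid], "rb") as f:
            return pickle.load(f)


def iterate_in_memory(partitions, step, iterations, store):
    """Run `step` on every partition for several iterations, keeping the
    intermediate results in `store` instead of writing them out between jobs."""
    for pid, part in enumerate(partitions):
        store.put(pid, part)
    for _ in range(iterations):
        for pid in range(len(partitions)):
            store.put(pid, step(store.get(pid)))
    return [store.get(pid) for pid in range(len(partitions))]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store = SpillingStore(memory_budget=2, spill_dir=tmp)
        result = iterate_in_memory([[1, 2], [3], [4, 5]], lambda p: [x * 2 for x in p], 2, store)
        print(result)                                          # [[4, 8], [12], [16, 20]]
        print(sorted(store.in_memory), sorted(store.on_disk))  # [0, 1] [2]
```

Only the partition that does not fit pays for pickling and file I/O; the others are handed from one iteration to the next as ordinary objects.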

- Interactive Operations on Spark RDD

  - If different queries are run repeatedly on the same set of data, that data can be kept in memory for better execution times.

  - By default, each transformed RDD may be recomputed each time you run an action on it.

  - You can also persist an RDD in memory, in which case Spark keeps its elements around on the cluster for much faster access the next time you query it.

  - Spark also supports persisting RDDs on disk, or replicating them across multiple nodes.
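The difference between recomputation and persistence can be sketched with a toy lazy dataset. This is not PySpark: `ToyRDD` is a hypothetical class that, like an RDD, records how to compute itself from its parent and runs that computation only when an action (`count`, `collect`) is called. Without `persist()`, every action recomputes the chain; with it, the first action materializes the result and later actions reuse it. In real PySpark the corresponding calls are `RDD.cache()` and `RDD.persist(storageLevel)`, where levels such as `StorageLevel.MEMORY_AND_DISK` and `StorageLevel.MEMORY_ONLY_2` cover the on-disk and replicated variants.

```python
class ToyRDD:
    """Hypothetical toy, NOT the PySpark API: a lazily evaluated dataset that
    remembers how to compute itself from its parent (its lineage) and only
    runs that computation when an action such as count() or collect() is called."""

    def __init__(self, compute):
        self._compute = compute   # zero-argument function that builds the elements
        self._persisted = False
        self._cached = None
        self.compute_count = 0    # how many times this dataset was materialized

    @staticmethod
    def parallelize(data):
        items = list(data)
        return ToyRDD(lambda: list(items))

    # Transformations: lazy, they only add a new node to the lineage.
    def map(self, f):
        return ToyRDD(lambda: [f(x) for x in self._materialize()])

    def filter(self, pred):
        return ToyRDD(lambda: [x for x in self._materialize() if pred(x)])

    def persist(self):
        """Mark this dataset to be kept in memory after its first computation."""
        self._persisted = True
        return self

    def _materialize(self):
        if self._cached is not None:
            return self._cached
        self.compute_count += 1
        result = self._compute()  # walks back through the parent chain
        if self._persisted:
            self._cached = result
        return result

    # Actions: trigger the actual computation.
    def count(self):
        return len(self._materialize())

    def collect(self):
        return list(self._materialize())


if __name__ == "__main__":
    base = ToyRDD.parallelize(range(10))

    squares = base.map(lambda x: x * x)  # not persisted
    squares.count()
    squares.collect()
    print(squares.compute_count)         # 2: recomputed for every action

    cached = base.map(lambda x: x * x).persist()
    cached.count()
    cached.collect()
    print(cached.compute_count)          # 1: later actions reuse the kept result
```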

3. References

https://www.tutorialspoint.com/apache_spark/apache_spark_rdd.htm
