
Hierarchical Clustering and Dendrograms

MLAI/Clustering 2020. 1. 22. 06:17

1. Overview

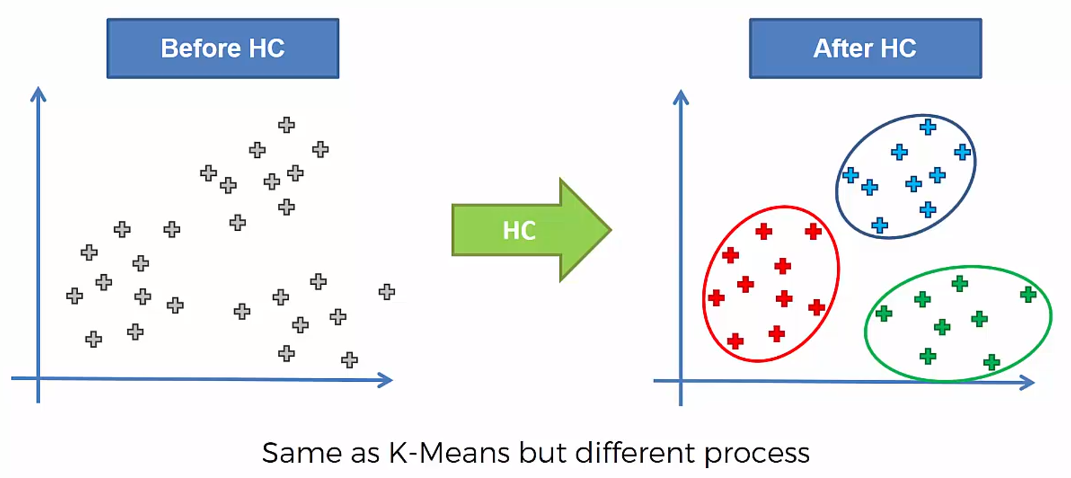

If you have data points on a scatterplot, as we looked at previously, they live in a two-dimensional space. If you apply hierarchical clustering (H.C. for short), you again get clusters, much like with k-means. In fact, sometimes the results are exactly the same as those of k-means clustering. The process itself, however, is a bit different.

2. Description

2.1 Procedure

Step 1: Make each data point a single-point cluster, which gives N clusters.

Step 2: Take the two closest data points and make them one cluster, which gives N-1 clusters.

Step 3: Take the two closest clusters and make them one cluster, which gives N-2 clusters.

Step 4: Repeat Step 3 until only one cluster remains.

FIN: The last merge combines the two remaining clusters. They are necessarily the closest pair, since no other clusters are left.
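The steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names `single_linkage` and `agglomerate`, the sample points, and the choice of "closest pair of points" as the cluster distance are all assumptions made for the example.

```python
import math
from itertools import combinations

def single_linkage(a, b):
    """Cluster distance as the closest pair of points (an assumed choice)."""
    return min(math.dist(p, q) for p in a for q in b)

def agglomerate(points):
    """Merge clusters bottom-up; record each merge as (cluster_a, cluster_b, distance)."""
    clusters = [[p] for p in points]      # Step 1: N single-point clusters
    merges = []
    while len(clusters) > 1:              # Steps 2-4: repeat until one cluster remains
        # Find the pair of clusters with the smallest distance between them.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: single_linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        d = single_linkage(clusters[i], clusters[j])
        merges.append((clusters[i], clusters[j], d))
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return merges

# Hypothetical sample data: two tight pairs and one outlier.
points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0), (10.0, 0.0)]
merges = agglomerate(points)
for a, b, d in merges:
    print(f"merge {a} + {b} at distance {d:.2f}")
```

With N points the loop always performs N-1 merges, and the recorded merge distances are exactly the heights a dendrogram would draw.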

2.2 Measure of similarity

How you measure the distance between clusters can significantly affect the result of hierarchical clustering.

The distance between clusters is a crucial element of the algorithm, so keep track of exactly how you define it in your approach.
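To make the point concrete, here is a small sketch of four commonly used definitions of the distance between two clusters: closest points, furthest points, average over all pairs, and distance between centroids. The function names and the two sample clusters are assumptions made for illustration; the same pair of clusters yields a different distance under each definition.

```python
import math
from statistics import mean

def closest_points(a, b):
    """Smallest distance between any point in a and any point in b."""
    return min(math.dist(p, q) for p in a for q in b)

def furthest_points(a, b):
    """Largest distance between any point in a and any point in b."""
    return max(math.dist(p, q) for p in a for q in b)

def average_distance(a, b):
    """Mean of all pairwise distances between a and b."""
    return mean(math.dist(p, q) for p in a for q in b)

def centroid_distance(a, b):
    """Distance between the mean points (centroids) of a and b."""
    centroid = lambda cluster: tuple(mean(axis) for axis in zip(*cluster))
    return math.dist(centroid(a), centroid(b))

# Hypothetical clusters, chosen only to show that the measures disagree.
a = [(0.0, 0.0), (0.0, 2.0)]
b = [(3.0, 0.0), (5.0, 0.0)]

for measure in (closest_points, furthest_points, average_distance, centroid_distance):
    print(f"{measure.__name__}: {measure(a, b):.3f}")
```

Because the merge order depends on which pair of clusters is "closest", swapping one definition for another can change the resulting hierarchy.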

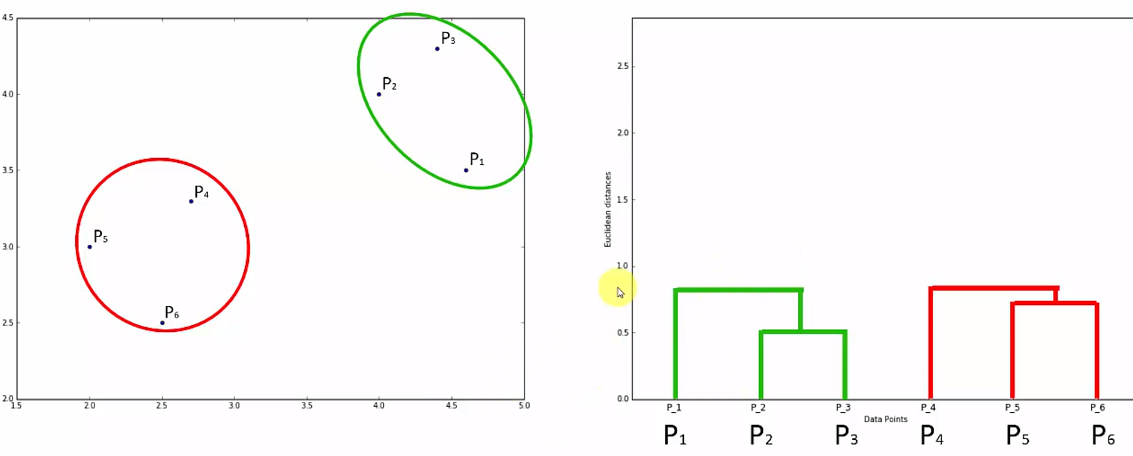

2.3 Clustering using Dendrograms

We don't want the dissimilarity within a cluster to exceed a chosen threshold, so setting a threshold gives us a specific number of clusters.

To choose it, find the largest distance in the dendrogram and set a threshold that crosses it. That threshold then determines the optimal number of clusters and the clusters themselves. It doesn't matter exactly where the threshold sits, low or high, as long as it crosses this line. Here the two clusters are this one and this one. This is one approach to finding the optimal number of clusters, and in this case the result makes sense.
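The threshold rule can be sketched as follows. This is a simplified reading of the approach, assuming the "largest distance" is the largest gap between consecutive merge heights in the dendrogram. The function name `cut_at_largest_gap` and the merge heights are hypothetical, and placing the threshold at the midpoint of the gap is an arbitrary choice, since any value inside the gap gives the same clusters.

```python
def cut_at_largest_gap(heights):
    """Return (threshold, number_of_clusters) for a list of merge heights."""
    hs = sorted(heights)
    # Gap between each pair of consecutive merge heights: (size, lower, upper).
    gaps = [(hi - lo, lo, hi) for lo, hi in zip(hs, hs[1:])]
    _, lo, hi = max(gaps)
    threshold = (lo + hi) / 2             # any value strictly inside the gap works
    # Every merge above the threshold is undone, and each undone merge adds one cluster.
    n_clusters = sum(h > threshold for h in hs) + 1
    return threshold, n_clusters

# Hypothetical merge heights for six data points (five merges).
heights = [1.0, 1.0, 1.5, 2.0, 6.5]
threshold, n_clusters = cut_at_largest_gap(heights)
print(f"threshold = {threshold}, clusters = {n_clusters}")
```

For these made-up heights the largest gap lies between 2.0 and 6.5, so only the final merge is cut and two clusters remain.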

2.3.1 Another example

3. Reference
