
Apache Hadoop

DistributedSystem, 2019. 9. 5. 03:44

1. Overview

Apache Hadoop is a set of software technology components that together form a scalable system optimized for analyzing data. Data analyzed on Hadoop has several typical characteristics.

- Structured: For example, customer data, transaction data, and clickstream data that is recorded when people click links while visiting websites

- Unstructured: For example, text from web-based news feeds, text in documents and text in social media such as tweets

- Very large in volume

- Created and arriving at a high rate

- Typically processed through ETL:

  - Extract: fetching the data from multiple sources

  - Transform: converting the existing data to fit the analytical needs

  - Load: loading the data into the right system to derive value from it
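The three ETL steps can be sketched in a few lines of plain Python. This is only an illustration of the idea, not how Hadoop itself runs ETL: the two in-memory sources, the field names `customer_id` and `amount`, and the dictionary used as the target system are all hypothetical.

```python
import csv
import io
import json

# Hypothetical raw inputs standing in for two different sources.
CSV_SOURCE = "customer_id,amount\n1,19.99\n2,5.00\n1,3.50\n"
JSON_SOURCE = '[{"customer_id": 2, "amount": "7.25"}]'


def extract():
    """Extract: fetch records from multiple sources."""
    rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    rows.extend(json.loads(JSON_SOURCE))
    return rows


def transform(rows):
    """Transform: normalize types and total the amount per customer."""
    totals = {}
    for row in rows:
        customer = int(row["customer_id"])
        totals[customer] = totals.get(customer, 0.0) + float(row["amount"])
    return {customer: round(total, 2) for customer, total in totals.items()}


def load(totals, target):
    """Load: write the result into the target system (here, a plain dict)."""
    target.update(totals)
    return target


warehouse = load(transform(extract()), {})
print(warehouse)
```

On a real cluster each step would read from and write to distributed storage, but the division of work into extract, transform, and load stays the same.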

2. Description

2.1 Components

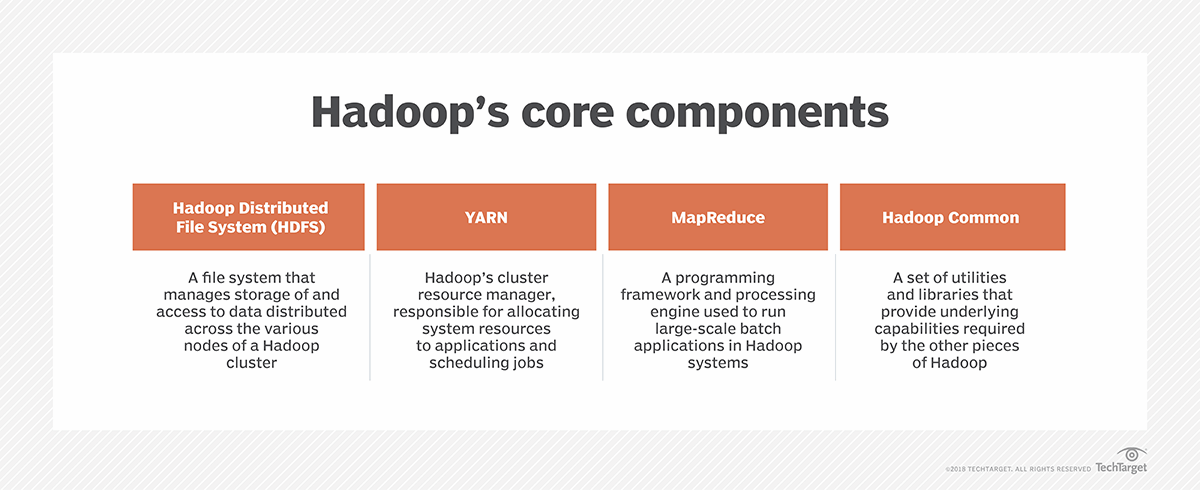

| Components | Description |
| --- | --- |
| Hadoop Common | Contains libraries and utilities needed by other Hadoop modules |
| Hadoop Distributed File System (HDFS) | A distributed file system that stores data on commodity machines, providing very high aggregate bandwidth across the cluster |
| Hadoop YARN | A resource-management platform responsible for managing compute resources in clusters and using them to schedule users' applications |
| Hadoop MapReduce | A programming model for large-scale data processing |
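To make the MapReduce programming model more concrete, here is a minimal single-process sketch of a word count. It does not use the Hadoop API; the function names `map_phase`, `shuffle`, and `reduce_phase` are illustrative assumptions, and on a real cluster the map and reduce steps would run in parallel on many nodes.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over the input lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


counts = word_count(["Hadoop stores data", "Hadoop processes data in parallel"])
print(counts)
```

Because each call to `map_phase` depends only on its own line and each call to `reduce_phase` depends only on its own key, both steps can be spread across machines without coordination, which is the property the model relies on.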

2.2 Categorizing Big Data

| Category | Description |
| --- | --- |
| Structured | Data stored in rows and columns, like relational data sets |
| Unstructured | Data that cannot be stored in rows and columns, such as video and images |
| Semi-structured | Data in formats such as XML that are readable by both machines and humans |

2.3 Advantages of Hadoop

- Lets users rapidly write and test distributed systems

- Automatically distributes data and work across the machines

- Utilizes the underlying parallelism of the CPU cores

- The Hadoop library is designed to detect and handle failures at the application layer

- Servers can be added to or removed from the cluster dynamically at any time

- Open source and written in Java, so it runs on any platform with a Java runtime

2.4 Features

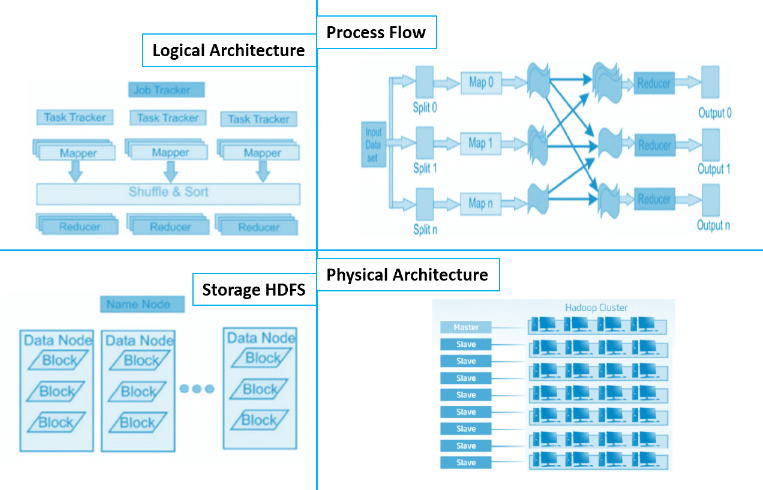

| Features | Description |
| --- | --- |
| Distributed Processing | Data is stored in a distributed manner in HDFS across the cluster and processed in parallel on a cluster of nodes. |
| Fault Tolerance | By default, three replicas of each block are stored across the cluster. If any node goes down, the data on that node can be recovered automatically from other nodes. |
| Reliability | Thanks to replication, data stays safely stored on the cluster despite machine failures. |
| High Availability | Data remains available and accessible even when a hardware failure occurs, because multiple copies of the data exist. |
| Scalability | Hadoop scales horizontally: new nodes can be added to the cluster without any downtime. |
| Economic | It is inexpensive because it runs on a cluster of commodity hardware and requires no specialized machines. Nodes can be added easily, without downtime and without much pre-planning, which greatly reduces cost. |
| Easy to use | The client does not need to deal with distributed computing; the framework takes care of it. |
| Data Locality | Hadoop works on the data locality principle: computation is moved to the data instead of data to the computation. The client submits an algorithm, and the algorithm is moved to the data in the cluster, rather than the data being brought to the location where the algorithm was submitted and processed there. |
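The fault tolerance and data locality features can be illustrated with a toy simulation. This is a sketch under simplifying assumptions, not how HDFS or YARN are implemented: replicas are placed at random (real HDFS placement is rack-aware), and the node names, block IDs, and helper functions are hypothetical.

```python
import random

REPLICATION_FACTOR = 3  # default number of replicas per block, per the text


def place_blocks(block_ids, nodes, rng):
    """Assign each block to REPLICATION_FACTOR distinct nodes (random toy policy)."""
    return {block: set(rng.sample(nodes, REPLICATION_FACTOR)) for block in block_ids}


def fail_node(placement, node):
    """Drop a failed node from every block's replica set."""
    return {block: holders - {node} for block, holders in placement.items()}


def re_replicate(placement, live_nodes, rng):
    """Copy under-replicated blocks to other live nodes until the factor is met."""
    restored = {}
    for block, holders in placement.items():
        candidates = [node for node in live_nodes if node not in holders]
        missing = REPLICATION_FACTOR - len(holders)
        restored[block] = holders | set(rng.sample(candidates, missing))
    return restored


def schedule(placement, block):
    """Data locality: run the task on a node that already holds the block."""
    holders = sorted(placement[block])
    return holders[0] if holders else None


rng = random.Random(0)
nodes = ["node1", "node2", "node3", "node4", "node5"]
placement = place_blocks(["blk_0", "blk_1", "blk_2"], nodes, rng)

# Simulate node1 going down, then restore the replication factor.
live_nodes = [node for node in nodes if node != "node1"]
degraded = fail_node(placement, "node1")
recovered = re_replicate(degraded, live_nodes, rng)

for block in recovered:
    print(block, sorted(recovered[block]), "-> task runs on", schedule(recovered, block))
```

Every block still has at least two copies after one node fails, so nothing is lost, and the scheduler always picks a node that already stores the block instead of shipping the block to the task.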
