-

Thompson SamplingMLAI 2020. 1. 22. 08:31

1. Overview

2. Description

2.1 Intuition

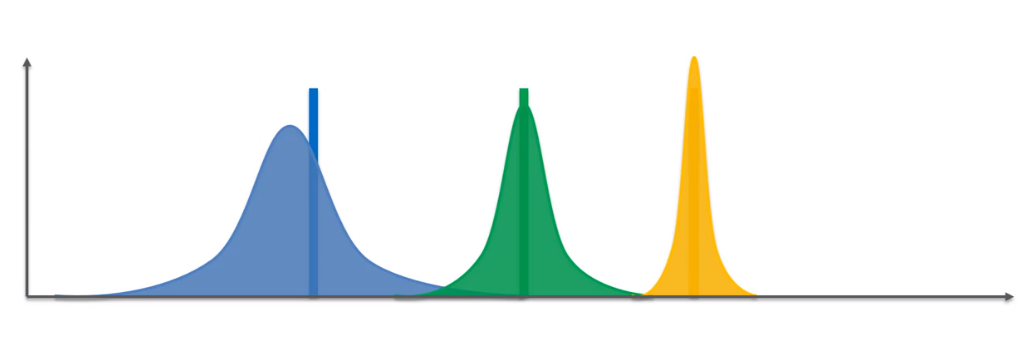

when you get a job description but basically just imagine distribution behind each one of these expected values. So this is just the center of central distribution or the actual expected return from that machine.

the algorithm actually works the algorithm itself doesn't know this information. So this is hidden but it's just there for us so that we can better understand what's going on. So these are the expected returns the actual expected returns of each of the machines. And obviously, if you knew this right away you would say that the machine of the three or the yellow machine is the best one because it has the highest return right. It has the highest return you'd always just bet on this one.

You have no prior knowledge of the current situation or status of things and therefore all the machines are identical. And you have to have at least one or even a couple of trial rounds just to get some data to analyze.

based on those trial runs the algorithm the Tompson something gets them this is where it starts getting different to the upper conditions but we'll construct a distribution right.

And so we're just pulling the arm several times like four times for instance and we're getting some values and that are going to be somewhere around.

the actual meaning of this these distributions is not what you might think at first so these distributions are actually showing us or they're representing not to the degree we're not trying to guess the distributions behind the machine.

We are constructing something completely different something completely out of this world. We're constructing distributions of where we think the actual expected value might lie.

It's very important to understand that so we are creating kind of a if you want think of it that way we're creating an auxiliary mechanism for us to solve the problem.

we're trying to do this create this perception of the world we're trying to mathematically explain what we think is actually going on or what could be going on and that is important also an important thing because this demonstrates that the Tomsen sampling is a probabilistic algorithm.

it's pulling them so according to the distribution right. It's more or less and less likely but still, it can happen that you can see this yellow values actually quite far from the center but it still can happen that it pulled this Valier distribution and it can

So we have created this hypothetical or imaginary batch of mush or not batch imaginary set of machines in our own virtual world where we are saying Okay

what that will do is actually it will give us a values to the machine spit it out or spit out a value but that value is going to be based on the distribution behind this machine where this is the actual expected value of that distribution. So now this information is new information to the algorithm.

So now I have to adjust my perception of the world. Right, so I have a prior probability So these are all for the Green Machine This is my prior distribution.

This is our new information we're going to added in and see where see how that changes our perception of the world our perception of the role has changed. The tradition has shifted a bit and it's become narrower because we have more information we are sample

That is pretty much how the Tomsen sampling algorithm works. And as you can see it is a stick algorithm and every time we're generating these values they are kind of creating this hypothetical set up of the bandits and then we're solving that and then we're applying the results to the real world.

We're adjusting our perception of reality based on the new information that generates and then we're doing that again.

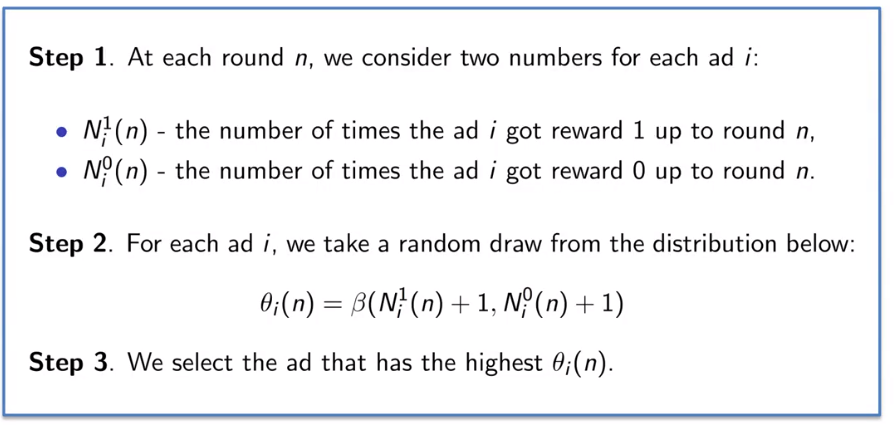

2.2 Procedure

3. UCB vs Thompson Sampling

Quickly compare the two because they do solve the same problem they solve the problem of the multi-armed bandit and let's have a look at some of the pros and cons of each of the algorithms.

3.1 Deterministic vs Probabilistic

3.2 Update

4. Reference

'MLAI' 카테고리의 다른 글

Natural Language Processing (NLP) (0) 2020.01.22 Eclat (0) 2020.01.22 Error Metrics (0) 2019.12.19 Different Machine Learning Categories and Algorithms (0) 2019.09.30